On Duality

The other half of the universe comes for free.

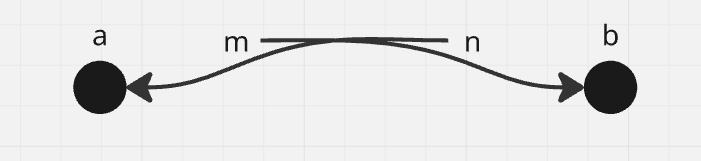

I've just finished writing the addendum to the previous post, including the bidirectional mint. I've spent around an hour in pure thought about the fact that the two arrows in that construction always flow into the nodes themselves. For reference, here’s the mint in question:

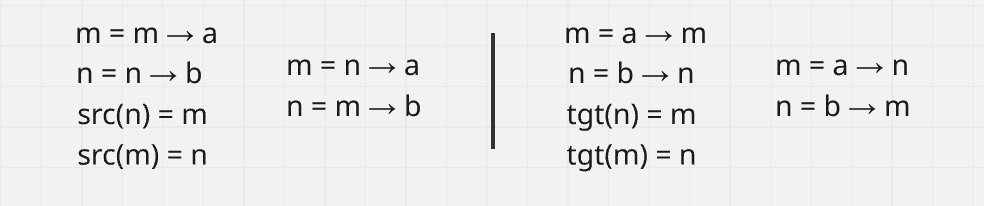

This isn’t the most intuitive: if we want to find out where we can flow from a or b, we look for outgoing arrows and don’t see these arrows at all. To mitigate that, I thought about inverting the arrows, but for some reason feared that this would somehow break the mint. But weave takes a lot from category theory, and the idea that duality always produces something correct, even if useless, carries over, so let’s try it. Here are the two constructions side by side:

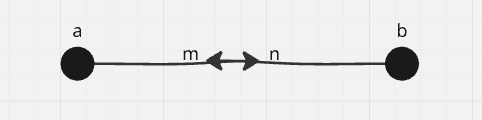

Basically, every source and target relation is reversed, and the result is familiar.
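To make the reversal concrete, here is a minimal Python sketch. The dict encoding and the names `dualize`, `orig_src` and `orig_tgt` are my own illustration, not part of weave. The original mint is assumed to be the one where m and n each start at the other arrow and end at a and b respectively, which is how I read "the arrows flow into the nodes".

```python
def dualize(src, tgt):
    """Reverse every arrow: old sources become targets and vice versa."""
    return dict(tgt), dict(src)

# Assumed encoding of the original mint: both arrows flow into the nodes,
# and each arrow's source is the other arrow.
orig_src = {"m": "n", "n": "m"}
orig_tgt = {"m": "a", "n": "b"}

dual_src, dual_tgt = dualize(orig_src, orig_tgt)
print(dual_src)  # {'m': 'a', 'n': 'b'}
print(dual_tgt)  # {'m': 'n', 'n': 'm'}
```

Dualizing twice gives back the original pair of maps, which is the sense in which the other half comes for free.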

I confess that what we have here seems more desirable than the original, if for no other reason than that it’s a bit more welcoming for traversal from each end. The traversal itself is the mirror image of what we had before: this time, we take src(tgt(arrows_out(·))) and it produces the desired results:

src(tgt(arrows_out(a)))

= src(tgt([m]))

= src([n])

= [b]

src(tgt(arrows_out(b)))

= src(tgt([n]))

= src([m])

= [a]

The dual identities peculiarity from before is preserved: the target of each arrow is the other arrow, but their sources come from outside. If we have a normal arrow r = a → b, assuming that a and b are knots, the following happens when we use this traversal on it:

src(tgt(arrows_out(a)))

= src(tgt([r]))

= src([b])

= [b] ✓

This is, in a way, better than what we had with hop in the last post:

tgt(src(arrows_in(a)))

= tgt(src([r]))

= tgt([a])

= [a] ✕

It turned out that hop isn’t good at traversing normal arrows, but this new hop is!
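The new hop can be checked mechanically on both the mint and a plain arrow. This is a minimal sketch under my own assumptions: entities are strings, src and tgt are plain dicts, a knot is encoded as an entity that is its own source and target, and `arrows_out` skips the knot itself so that the result matches the derivations above. The names `new_hop`, `mint_src`, `plain_src` and so on are hypothetical.

```python
def arrows_out(x, src):
    """Arrows whose source is x. A knot is its own source, so x itself is
    skipped (an assumption made to match the derivations in this post)."""
    return [e for e in src if src[e] == x and e != x]

def new_hop(x, src, tgt):
    """src(tgt(arrows_out(x))), applied to each outgoing arrow in turn."""
    return [src[tgt[e]] for e in arrows_out(x, src)]

# The dual mint: m goes from a into n, n goes from b into m.
mint_src = {"m": "a", "n": "b"}
mint_tgt = {"m": "n", "n": "m"}

# A plain arrow r = a -> b between two knots (self-sourced, self-targeted).
plain_src = {"a": "a", "b": "b", "r": "a"}
plain_tgt = {"a": "a", "b": "b", "r": "b"}

print(new_hop("a", mint_src, mint_tgt))    # ['b']
print(new_hop("b", mint_src, mint_tgt))    # ['a']
print(new_hop("a", plain_src, plain_tgt))  # ['b']
```

The same function handles both cases with no special-casing, which is the property the new hop has over the old one.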

Duality is a fast way to see if there’s more to a space. Sometimes, it turns out that the dual of an already useful concept is even more useful, as happened here. Not all dualities are obviously useful, but taking the dual is a great exercise, especially for something like what we’re doing here: I feel like I only have a budding understanding of what’s better when comparing two concepts. Here’s what that feels like…

Given two entities A and B, assuming that they offer similar answers to the same questions, I’d say A is better if there’s a shorter path to the answers, and one that is algorithmically more conservative. Since we’re working with graphs, algorithmic complexity is a tense question, so adding more structure instead of more data helps. That’s why knots, which are informationally redundant, aren’t optimal: they show that we’re leaning too hard into algorithms, and weave sort of promises that it might be best to try a different route. There’s an adjacent topic here about whether more structure necessarily means more space as opposed to more time, which I think is very hard and not at all obvious. You know it’s bad when the main argument for why not is “there’s this thing in physics called time crystals, I think we should look into them”.